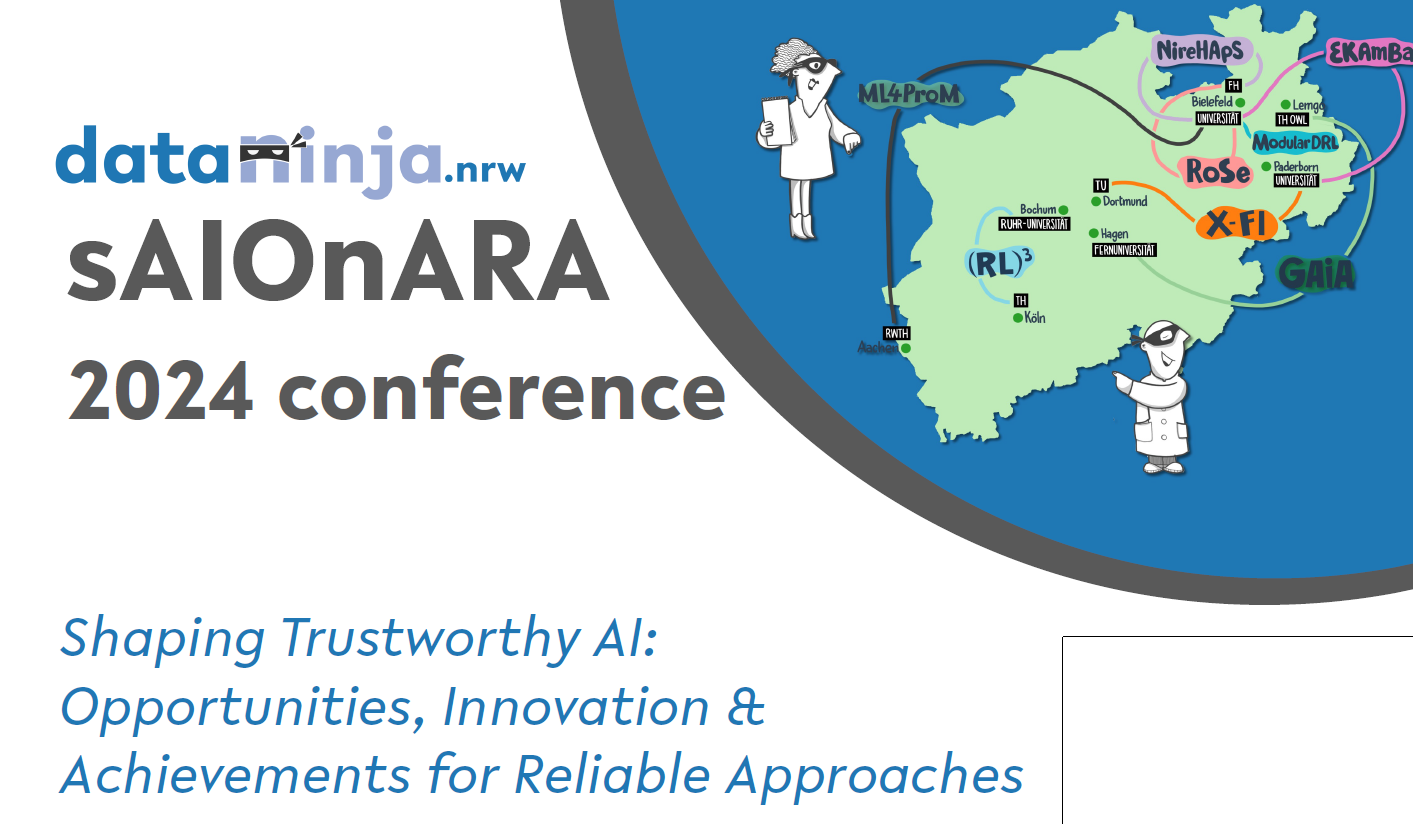

In June 2025, scientists from all over the world will meet on Sao Miguel, the main island of the Azores, to exchange scientific ideas. The research of the Gummersbach campus is attracting attention in this international circle, as Prof. Wolfgang Konen was invited by Don Jones, one of the co-organizers of the conference, to speak about his research area of constrained optimization as an invited speaker. This is a special honor, because normally one has to undergo a more or less extensive review process. Constrained optimization means that you not only want to minimize a target value (e.g. fuel consumption of a car), but also have to comply with one or more constraints (e.g. concerning the statics of the car). Years ago, Wolfgang Konen, together with Samineh Bagheri (former PhD student) and Prof. Thomas Bäck from Leiden University, developed the R package SACOBRA for constrained optimization, which is still one of the leading software packages in this field. A port of the R software to Python is currently in progress. The MOPTA conference in the Azores will also offer many opportunities for networking. Last but not least, the beautiful landscape of the Azores, which will also be visited on short excursions, will be inviting. So far for the simulation... The real world is generally more challenging. Thanks to a fruitful collaboration with Prof. Tichelmann's Lab of Applied Artificial Intelligence, we were now able to put the idea to the test on a real-world robotics task. The lab offers a real-world implementation of the cart pole swing-up environment, a well-known benchmark for control problems and reinforcement learning. A physical pendulum is attached to a cart via an unactuated hinge. Only by swift movements of the cart to the left or to the right, the pendulum is first to be swung up and then balanced in the unstable equilibrium. During a previous bachelor's thesis, a DQN could be trained to solve the challenge successfully. We now used this DRL agent as oracle for our experiment. While the additional challenges of a real-world experiment were noticeable, the algorithm proved its robustness and managed to find a DT on par with the DQN agent, while using fewer parameters. Further details can be found in our latest paper. A video shows the DT agent in operation. On July 17th, I presented my work "Exploring the Reliability of SHAP Values in Reinforcement Learning", co-authored by Dataninja colleague Moritz Lange and our supervisors Prof. Laurenz Wiskott and Prof. Wolfgang Konen. Experiencing the conference at Valletta's (Malta) impressive Mediterranean Conference Center, learning about the work of newly met people, and reconnecting with familiar members of the XAI community from last year, has definitely been a highlight of this summer. Now we already held the closing conference of the Dataninja project. From Tuesday 25th to Thursday 27th we had the pleasure to enjoy three days of science and meetups at Bielefeld University, the “headquarter” of Dataninja. The rich program consisted of keynote talks, poster sessions, and reports from our sibling project “KI starters”. The (RL)3 project of Moritz Lange and supervisor Prof. Wiskott from Ruhr-University Bochum and myself under the supervision of Prof. Konen from TH Köln, contributed with a short overview of our joint project and a more in-depth presentation of our most recent research in two poster contributions. Of special interest to our topics were the keynotes by Holger Hoos ("How and Why AI will shape the future"), Henning Wachsmuth ("LLM-based Argument Quality Improvement"), and Sebastian Trimpe ("Trustworthy AI for Physical Machines"). Many thanks to Prof. Barbara Hammer and her team (Dr. Ulrike Kuhl, Özlem Tan) from Bielefeld University for organizing and hosting such a fantastic event! As usual, it has been a very pleasant occasion to meet our fellow PhD candidates, and we have already made plans to meet up again, because the first ones are already on the home straight. Listening to Yann LeCun in person speak about the challenges of machine learning was inspiring and attending Moritz' presentation of our collaborative work "Interpretable Brain-Inspired Representations Improve RL Performance on Visual Navigation Tasks was a real pleasure. Besides many interesting talks (one of them by my colleague from the Dataninja research training group Patrick Kolpaczki presenting his work on approximating Shapley values), attending such a big conference was a memorable experience, as was exploring the nature surrounding Vancouver.

The work I presented is focused on using Shapley values for explainable reinforcement learning in multidimensional observation and action spaces, investigating questions about the reliability of approximation methods and the interpretation of feature importances. While Shapley values are a widely-used tool for machine learning, more work is required for its application to reinforcement learning. To those interested in Shapley values, I recommend to also take a look at the contribution of my Dataninja colleague Patrick Kolpaczki on improving approximation of Shapley values. The conference proceedings are already available as part of Springer's book series "Communications in Computer and Information Science".

CIOP News

The MOPTA conference (Modeling and Optimization: Theory and Applications, https://coral.ise.lehigh.edu/mopta2025/) has been around for many years, since 2001 to be precise. It usually takes place in America, but this year, in 2025, European and American scientists can meet in the middle, so to speak, because it is being held in the Azores in June 2025.

As mentioned in a previous blog post, we developed an iterative algorithm for training decision trees (DTs) from trained deep reinforcement learning (DRL) agents. The algorithm combines the simple structure of DTs and the predictive power of well-performing DRL agents. In our publication, we tested the idea on seven different control problems and successfully trained shallow DTs for each of these challenges, containing orders of magnitude fewer parameters than the DRL agents whose behavior they imitate.

After having participated in its debut last year, it was a special pleasure to visit the second edition of The World Conference on Explainable Artificial Intelligence (xAI2024). The conference was a full immersion into all aspects of explainable AI. The keynote speech by Prof. Fosca Giannotti about hybrid decision-making and the two panel discussions on legal requirements of XAI and XAI in finance broadened the views between detailed poster and presentation sessions.

Time flies... It wasn't that long ago (or at least it feels like it) that I wrote a blog post about the first Dataninja Retreat.

In the last week of February, my RL3 Dataninja colleague Moritz Lange and I had the chance to visit the AAAI conference on AI 2024.